Behind the Line: The problems with “The Algorithm”

I don’t usually want to get political in these pieces, but I was already planning to follow up the last article on this topic when Twitter walked right into the target area on their own. So yeah, that’ll come up here.

Last week I talked about why The Algorithm is something that tech companies strive for in order to solve certain logistical problems. The short version is that if it works right, it scales up infinitely. However, there are a few big problems with that.

1. You can’t fit evolving behavior into an algorithm.

Humans are not deterministic machines. We are unpredictable.

Yeah, there’s a debate about the existence of free will, but for the purposes of this piece, human behavior is so complex we can consider it unpredictable, and therefore functionally non-deterministic.

We can’t predict human/user behavior, so we can’t be certain how an algorithmic system designed to assist content curation will be used. Perhaps the use is fairly benign, like Amazon suggesting other items you might like by comparing your shopping cart to the patterns of other shoppers. Perhaps it is more questionable, like news aggregation that gives you what it thinks you want and drowns out other important stuff you might also want. The same point applies to YouTube suggestions. Then there’s the fact that…

2. People will try to game any system in front of them

Automated systems, such as Steam Greenlight, can be gamed and exploited. And this brings us back to Twitter, who have some notable problems with their systems.

Signal boosting is a thing on any social network. Because of that, people will pay to get more followers, forwards, retweets, and likes. This increases a message’s exposure, or at least the impression of its popularity. It can even construct a bogus echo chamber of like-minded “people” that drowns out other points. And this isn’t even generating any money for Twitter! It just provides a platform for other people to make money with bots or other simulated users. For those who didn’t know, you can even check who has real users following them with this tool: https://www.twitteraudit.com/

On top of that, these systems need to have certain rules to govern not only behavior of the system, but what is considered acceptable by the users. You know, the “Terms of Service”. But…

3. Uniform application of rules is critical!

Here’s where we get back to Twitter again. Rose McGowan’s account was suspended, apparently after she gave out someone’s personal contact information without consent. At least that was the explanation given. The problem is that this really comes across as a punitive move in the wake of her speaking out against sexual assault, given not only the timing but the greater context. Many people report that they’ve been harassed, impersonated, or had their contact information shared without their consent, and Twitter took no action in those cases. I even reviewed the Twitter Terms of Service, and violations of them are rampant with no repercussions. And yet if you change your location to Germany, where certain toxic behavior is illegal (your agreement or disagreement with that law is irrelevant to this point), the users engaging in it are blocked. That implies that there is an infrastructure of some sort in place that isn’t used uniformly. Remember that users who violate the TOS have no action taken against them, even if they are manually reported.

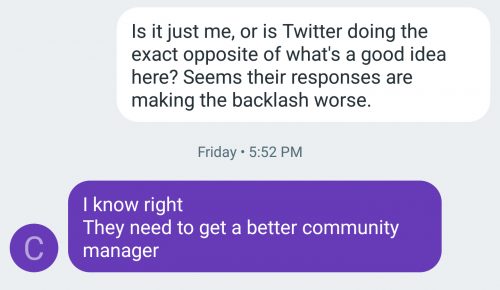

This disconnect is highly conspicuous, especially given the @Jack and @TwitterSafety response to the Rose McGowan situation.

👇🏼 thread. We need to be a lot more transparent in our actions in order to build trust. https://t.co/7T6aliOXmG

— jack (@jack) October 12, 2017

Considering our recent conversations, I asked Chris about this.

In a similar vein, there are YouTube strikes and takedowns that trigger automatically. Active takedown requests are also abused. That’s gaming the system, but it exposes another catch…

4. The people creating algorithms are not neutral

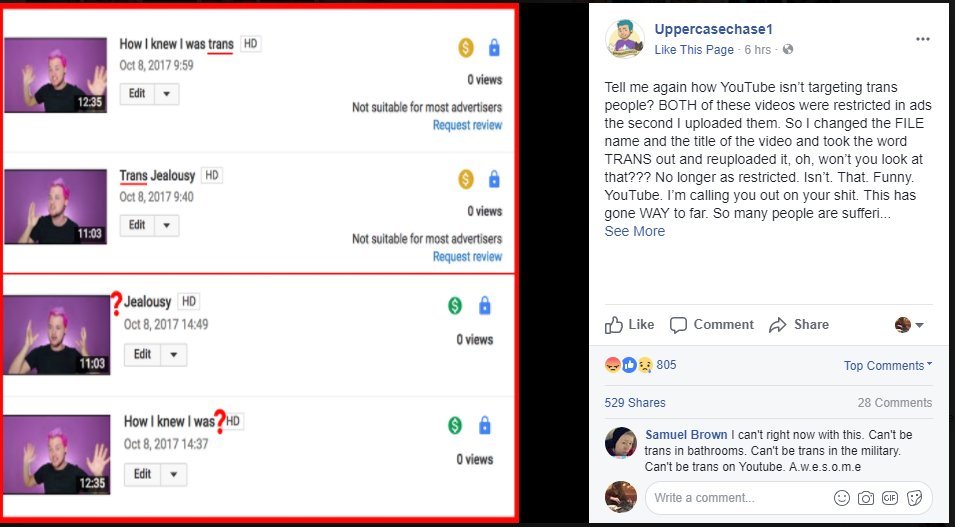

It can be overt, or it can be so subtle that no one notices, but people who create algorithmic solutions to problems can insert their own biases or prejudices into the logic. One example I came across has to do with what YouTube considers appropriate to monetize.

This example is about as one-to-one as you can get. Including the term “trans” changes something in the system. Whether you as a viewer agree with or are comfortable with the topic is irrelevant. The platform is NOT neutral. The platform decides what can and can’t be monetized.

Human oversight is necessary

The fact of the matter is that human oversight is a necessity at a high level. The algorithm, right now, isn’t a solution. It may never be a solution. It is a tool, one that can magnify the effectiveness of admins or curators, but those humans will continue to be necessary. They will need to investigate unusual behavior and unwelcome results on the platform for which they are responsible. Does this scale up infinitely? No, it does not. Investment in this is necessary. It’s not easy. It will hurt. Oversight itself is prone to issues with neutrality. (How many have seen a forum mod go on a power trip?) There is no perfect solution, and one reason for that is that we people are not automatons and will behave in unpredictable ways.

PS – A fun tip. If you see someone on social media who you think is saying something weird, try looking at their history. I find that most of the ones I see never speak or interact about any other topic. So, unless they’re so single-mindedly passionate about a topic that they devote their entire social media presence to repeating the same point, they’re probably a bot.
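For the curious, here is roughly what that tip looks like as code. This is only a sketch, not how any platform actually detects bots. It assumes you already have one topic label per post in an account's history, and the function names, the 20-post minimum, and the 90% cutoff are all made up for illustration.

```python
from collections import Counter


def dominant_topic_share(post_topics):
    """Return (topic, share) for the most common topic in a post history.

    post_topics is a list with one topic label per post (hypothetical input;
    labelling the posts is the hard part and is not shown here).
    """
    if not post_topics:
        return None, 0.0
    topic, count = Counter(post_topics).most_common(1)[0]
    return topic, count / len(post_topics)


def looks_single_topic(post_topics, min_posts=20, threshold=0.9):
    """Flag accounts whose history is almost entirely about one topic.

    min_posts and threshold are arbitrary illustrative values. A flag is a
    reason for a human to look closer, not proof that the account is a bot.
    """
    if len(post_topics) < min_posts:
        return False  # too little history to say anything
    _, share = dominant_topic_share(post_topics)
    return share >= threshold


suspicious = ["election"] * 19 + ["cats"]
ordinary = ["election"] * 10 + ["cats"] * 10
print(looks_single_topic(suspicious), looks_single_topic(ordinary))
```

Note that this is exactly the kind of simple rule that a genuinely passionate human would trip, which is why it only works as a prompt for a person to go and look.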

Kynetyk is a veteran of the games industry. Behind the Line aims to improve understanding of what goes on in the game development process and the business behind it. From “What’s taking this game so long to release?” to “Why are there bugs?” to “Why is this free to play?” or anything else, if there is a topic that you would like to see covered, please write in to kynetyk@enthusiacs.com or follow on Twitter @kynetykknows
